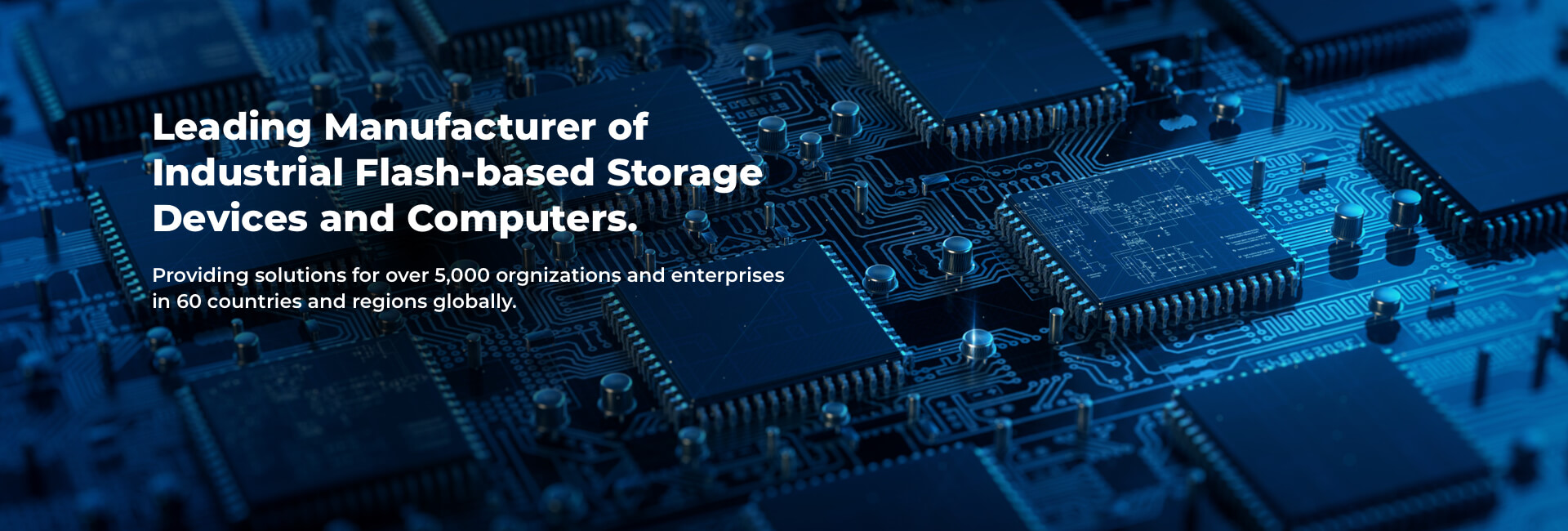

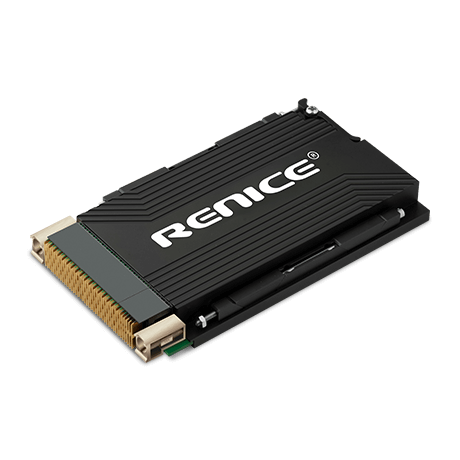

Renice® SSDs

Renice® Solutions

Renice develops and manufactures extreme performance, high reliability SSD products for various industries.

Flash-based Storage Solutions

-

Independent research and development Controller, enables highest reliability and data security with functions

-

Powerful ECC capability and exclusive Non-Balance Wear Leveling TechnologyTM, extended lifespan

-

Unique No TRIM-Full Speed technology ensures Renice SSDs maintain stable performance.

-

Customized functions like Physical destruction, Logical destruction, AES encryption can meet

-

The industrial grade materials and strict production process ensure low defects and extended

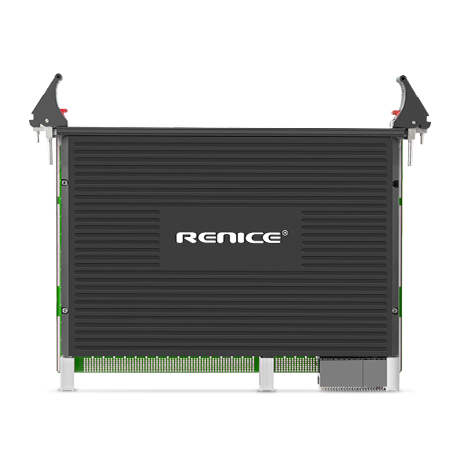

Rugged Computer Solutions

-

High reliability and diversified interface, suitable for compatible industrial computers and computing center

-

High-performance computing and high speed real-time bus

-

Support Air cool and Conductive cool

-

Extend Operating Temperature: -40°C~+55°C

-

Blockchain Solutions – IPFS Database Server Center

Renice® Brand

-

12+ Years

Rich experience in independent research and development and manufacturing

-

20+ Industries

Delivered superior solutions for more than 20 industries

-

5000+ Customers

Served over 5,000 customers worldwide

-

2000W+ Shipments

Products shipments exceed 20 Million all over the world

-

1PPM+ Defective Rate

High strict quality control and constantly keep 1PPM

Renice® News Center

- Renice Product NewsMore

-

Remarkable breakthrough of MRD code technology brings highlight for information security

March 4, 2024

-

February 15

2023

Take A Tour Of Renice's New Office

-

October 20

2022

Learn More About Renice's History

-

July 4

2019

CCTV Column Conducted An Interview And Filed Filming Of Renice

-

July 3

2019

Renice BMC Intelligent Health Management Software Will Be Launched

- Storage Industry KnowledgeMore

-

Ultra High Speed CFexpress Card Type B for Digital Cameras

February 6, 2023

-

November 23

2022

Detail the S.M.A.R.T of SSD

-

November 10

2022

Root Cause Analysis & Test for NAND Flash Bit Error

-

November 7

2022

Renice Launches Ultra-High-Speed CFast 2.0 Memory Cards

-

November 3

2022

What happens when Industrial SSD encounters Rail Transit

Enterprise of China SSD National Standard Formulation

China National High-tech Enterprise

Designated Supplier of Wold Well-known Airline