Solid State Drive Bad Block Management Method

How are Bad Blocks generated? How does an SSD discover and manage Bad Blocks? What are the problems with the Bad Block management strategy suggested by the manufacturer? What management method works better? Will formatting the drive cause the Bad Block Table to be lost? What are the security risks after an SSD is repaired? This article addresses these questions one by one.

Overview

The design of Bad Block management affects both the reliability and the efficiency of an SSD. The Bad Block management practices suggested by Nand Flash manufacturers are not always reasonable. If abnormal conditions are not considered carefully during product design, unexpected Bad Blocks often result.

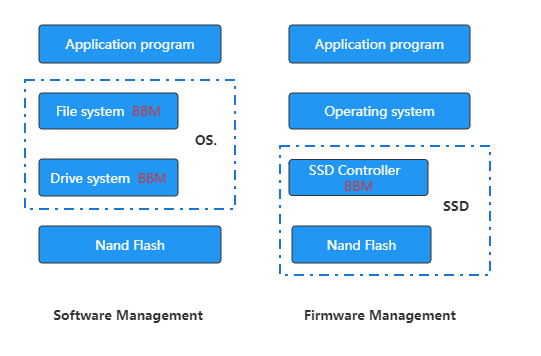

Who Manages Bad Blocks

When a dedicated flash file system is used, Bad Blocks are managed by that file system or by a driver.

Three Types of Bad Blocks

1. Factory Bad Blocks (or initial Bad Blocks): blocks that the manufacturer has tested, found not to meet its published standards, and marked as Bad Blocks before they leave the factory. Some factory Bad Blocks can be erased, while others cannot.

The Ratio of Factory Bad Blocks in the Entire Device

However, keeping the factory Bad Block ratio within 2% does not seem easy to guarantee. Renice received a new sample from the factory, and the measured Bad Block ratio was 2.55%, which exceeds 2%.

How to Determine Bad Blocks in SSD

1. Judgment Method of Factory Bad Blocks

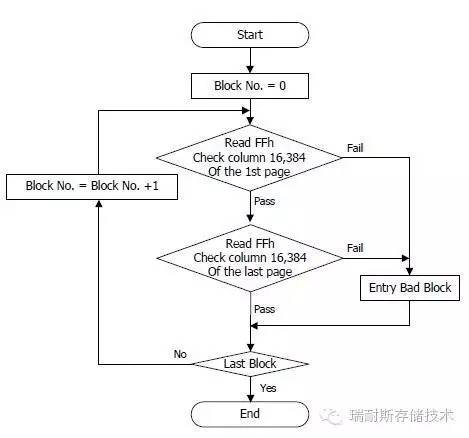

Scanning for factory Bad Blocks basically means checking whether the byte at the address specified by the manufacturer holds the FFh flag; if it does not read FFh, the block is a Bad Block.
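The scan described above can be sketched in a few lines. This is only an illustration under stated assumptions: `read_marker` is a hypothetical callback standing in for whatever low-level read returns the byte at the manufacturer-specified address, and the marker values are made-up sample data.

```python
GOOD_MARK = 0xFF  # per the text: a good block reads FFh at the marker address


def scan_factory_bad_blocks(read_marker, num_blocks):
    """Return the indices of blocks whose marker byte is not FFh.

    read_marker(block) is a hypothetical callback that returns the byte
    stored at the manufacturer-specified marker address of that block.
    """
    return [b for b in range(num_blocks) if read_marker(b) != GOOD_MARK]


# Simulated marker bytes for an 8-block device (made-up data).
markers = [0xFF, 0xFF, 0x00, 0xFF, 0xFF, 0xF0, 0xFF, 0xFF]
factory_bad = scan_factory_bad_blocks(lambda b: markers[b], len(markers))
print(factory_bad)
```

Any value other than FFh marks the block as bad, so a partially cleared marker such as F0h is treated the same as 00h.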

Quoting a figure in the Hynix datasheet to illustrate:

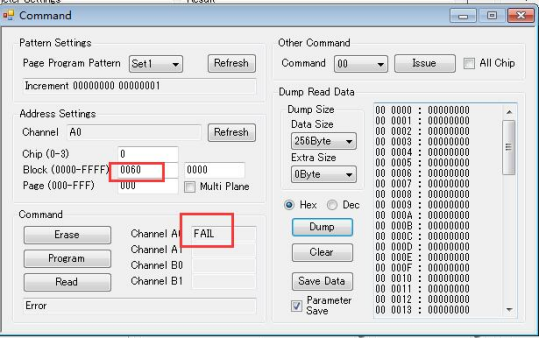

The test results are shown below. This may hold for factory Bad Blocks, but it does not necessarily hold for new Bad Blocks; otherwise it would be impossible to hide data in "Bad Blocks":

The manufacturer strongly recommends against erasing Bad Blocks. Once the Bad Block flag is erased, it cannot be recovered, and writing data to a Bad Block is risky.

2. Judgment Method for New Bad Blocks During Use

New Bad Blocks are identified from the status register, which reports whether a Nand Flash operation succeeded. If the status register reports a failure during Program or Erase, the SSD controller lists the block as a Bad Block.
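A minimal sketch of that check follows. It assumes the pass/fail result is reported in bit 0 of the status byte, which is a common layout but should be confirmed against the datasheet of the actual part; the function name and the sample status values are hypothetical.

```python
STATUS_FAIL = 0x01  # assumption: bit 0 set means the last Program/Erase failed


def handle_operation_status(status, block, new_bad_blocks):
    """Record `block` as a new Bad Block if the status byte reports failure.

    Returns True when the operation succeeded, False otherwise.
    """
    if status & STATUS_FAIL:
        new_bad_blocks.add(block)
        return False
    return True


new_bad_blocks = set()
# Made-up status bytes: 0xE0 has the fail bit clear, 0xE1 has it set.
ok_erase = handle_operation_status(0xE0, block=12, new_bad_blocks=new_bad_blocks)
ok_program = handle_operation_status(0xE1, block=40, new_bad_blocks=new_bad_blocks)
print(ok_erase, ok_program, sorted(new_bad_blocks))
```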

Bad Block Management Methods

Bad Blocks are managed by building and updating the Bad Block Table (BBT). There is no unified specification or practice for the Bad Block Table. Some engineers use one table to manage both factory Bad Blocks and new Bad Blocks, some manage the two in separate tables, and some keep the initial Bad Blocks as one table and the factory Bad Blocks combined with the new Bad Blocks as another table.
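Since no layout is standardized, the following is just one possible sketch: it keeps factory and new Bad Blocks in two separate sets and derives the single-table view on demand. The class and method names are hypothetical and do not correspond to any particular firmware.

```python
from dataclasses import dataclass, field


@dataclass
class BadBlockTable:
    """Illustrative BBT that tracks factory and new Bad Blocks separately."""
    factory: set = field(default_factory=set)
    grown: set = field(default_factory=set)  # new Bad Blocks found during use

    def mark_grown(self, block):
        # A block already listed as a factory Bad Block is not recorded twice.
        if block not in self.factory:
            self.grown.add(block)

    def is_bad(self, block):
        return block in self.factory or block in self.grown

    def merged(self):
        """Single-table view: every Bad Block, sorted by block number."""
        return sorted(self.factory | self.grown)


bbt = BadBlockTable(factory={2, 5})
bbt.mark_grown(40)
bbt.mark_grown(5)  # already a factory Bad Block, so `grown` is unchanged
print(bbt.merged())
```

Keeping the two sets apart preserves the factory information even if the list of new Bad Blocks is later rebuilt.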

Bad Block Skipping Strategy

Bad Block Replacement Strategy (recommended by Nand Flash manufacturer)

Bad Block Replacement refers to using good blocks in the reserved area to replace Bad Blocks generated during use. Suppose an error occurs on the nth page during a program operation. Under the Bad Block Replacement strategy, the data from page 0 to page (n-1) is copied to the same positions in a free block in the reserved area (such as Block D), and then the data for the nth page, still held in the data register, is written to page n of Block D.

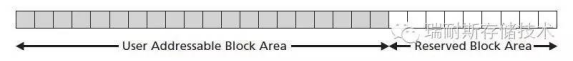
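The copy sequence can be modeled with plain lists standing in for blocks. This is a simplified sketch, not controller code: the function name, the 4-page block size, and the page contents are all hypothetical.

```python
def replace_bad_block(bad_block_pages, failed_page, pending_data, pages_per_block):
    """Build the replacement block after a program failure on `failed_page`."""
    replacement = [None] * pages_per_block  # freshly erased spare block
    # Copy pages 0..n-1 to the same positions in the replacement block.
    for page in range(failed_page):
        replacement[page] = bad_block_pages[page]
    # Write the data still held in the data register to page n.
    replacement[failed_page] = pending_data
    return replacement


# Pages 0 and 1 were programmed; programming page 2 failed.
block_a = ["a0", "a1", None, None]
block_d = replace_bad_block(block_a, failed_page=2, pending_data="a2",
                            pages_per_block=4)
print(block_d)
```

Pages after the failed one stay empty in the replacement block and can be programmed normally afterwards.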

The manufacturer's suggestion is to divide the entire data area into two parts. One part is the user-visible area, which is used for the user's normal data operations. The other part is the spare area reserved for replacing Bad Blocks, which stores the replacement data and holds the Bad Block Table. The spare area takes up 2% of the entire capacity.

This approach has two problems:

1. Directly reserving 2% of the area for Bad Block replacement reduces the available capacity and wastes space. At the same time, because fewer blocks are available, the average wear of the available blocks is accelerated;

2. If the number of Bad Blocks in the available area exceeds 2%, meaning that the entire reserved replacement area has been used up, any further Bad Blocks cannot be handled, and the SSD reaches the end of its life.
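The arithmetic behind the second point can be shown with a small worked example. The 4096-block device is a hypothetical figure, and the sketch simplifies by treating every reserved block as a replacement candidate, although part of the spare area also holds the Bad Block Table.

```python
def reserved_blocks(total_blocks, spare_percent=2):
    """Number of blocks set aside as the spare area (integer arithmetic)."""
    return total_blocks * spare_percent // 100


def can_replace(total_blocks, bad_blocks_so_far, spare_percent=2):
    """True while a free spare block remains for the next new Bad Block."""
    return bad_blocks_so_far < reserved_blocks(total_blocks, spare_percent)


total = 4096  # hypothetical device size in blocks
reserve = reserved_blocks(total)
print(reserve)                  # size of the reserved area in blocks
print(can_replace(total, 80))   # one spare block still left
print(can_replace(total, 81))   # reserve exhausted
```

With a fixed reserve, the drive fails at the first Bad Block after the reserve is used up, regardless of how many good blocks remain in the user area.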

Bad Block Replacement Strategy (the practice of some SSD manufacturers)

In real product designs, a separate 2% area dedicated to Bad Block Replacement is rare. In general, free blocks in the OP (Over Provisioning) area are used to replace new Bad Blocks that appear during use. Take garbage collection as an example. When the garbage collection mechanism runs, the valid page data in the block to be reclaimed is first moved to a free block, and then the Erase operation is performed on the block. Suppose the status register reports that the Erase failed. The Bad Block management mechanism then adds the block address to the new Bad Block list, writes the valid data pages of the Bad Block to a free block in the OP area, and updates the Bad Block management table. The next time data is written, the Bad Block is skipped and the write goes directly to the next available block.
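A simplified model of that garbage collection step is sketched below. It is an illustration only: `erase` is a hypothetical callback that returns True on success, blocks are represented by plain integers, and the free list stands in for the OP pool.

```python
def garbage_collect(victim, valid_pages, free_blocks, bad_blocks, erase):
    """Run one garbage collection step on `victim`.

    erase(block) is a hypothetical callback returning True on success.
    Returns (destination_block, moved_pages).
    """
    destination = free_blocks.pop(0)  # take a free block from the OP pool
    moved = list(valid_pages)         # valid pages are moved before the erase
    if erase(victim):
        free_blocks.append(victim)    # erased block rejoins the free pool
    else:
        bad_blocks.add(victim)        # recorded as a new Bad Block, never reused
    return destination, moved


free_blocks = [100, 101]
bad_blocks = set()
erase_fails_on_7 = lambda block: block != 7  # simulated: only block 7 fails

first = garbage_collect(7, ["p0", "p3"], free_blocks, bad_blocks, erase_fails_on_7)
second = garbage_collect(8, ["q1"], free_blocks, bad_blocks, erase_fails_on_7)
print(first, second, free_blocks, bad_blocks)
```

Because a block that fails to erase is never returned to the free list, later allocations skip it without any extra lookup.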

Security Risk of SSD Repair

For most SSD manufacturers that do not have their own controller technology, the usual practice when a product is returned for repair is to replace the faulty device and then rerun the mass production process. At that point, the new Bad Block list is lost. This means that Bad Blocks already present in the Nand Flash that was not replaced are no longer recorded, so the operating system or sensitive data may be written to a Bad Block area, which may cause the user's operating system to crash. Even for a manufacturer with controller technology, whether the existing Bad Block list is preserved for the user depends on the manufacturer's attitude toward the user.

Will the Generation of Bad Blocks Affect the SSD's Read/Write Performance and Stability

Factory Bad Blocks are isolated on the bitline, so they do not affect the erase speed of other blocks. However, if enough new Bad Blocks accumulate across the SSD, the number of available blocks on the whole disk decreases, which increases the number of garbage collections, and the reduced OP capacity seriously affects the efficiency of garbage collection. Therefore, once Bad Blocks increase to a certain level, they affect the performance stability of the SSD, especially under continuous writes. Because the system is performing garbage collection, performance drops and the SSD performance curve fluctuates greatly.
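The shrinking OP capacity can be made concrete with a short calculation. The sketch assumes the usual definition of the OP ratio as spare capacity divided by user capacity, and the block counts are hypothetical.

```python
def effective_op_ratio(physical_blocks, user_blocks, bad_blocks):
    """OP ratio = (usable physical blocks - user blocks) / user blocks."""
    usable = physical_blocks - bad_blocks
    return (usable - user_blocks) / user_blocks


# Hypothetical drive: 1100 physical blocks, 1000 exposed to the user.
fresh = effective_op_ratio(1100, 1000, bad_blocks=0)
worn = effective_op_ratio(1100, 1000, bad_blocks=50)
print(fresh, worn)
```

In this example, 50 Bad Blocks are under 5% of the physical blocks, yet they remove half of the OP space that garbage collection relies on.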